MarioNETte: Few-shot Face Reenactment Preserving Identity of Unseen Targets

- Sungjoo Ha, Martin Kersner, Beomsu Kim, Seokjun Seo, Dongyoung Kim

- AAAI, 2020

- [Project Page], [Paper]

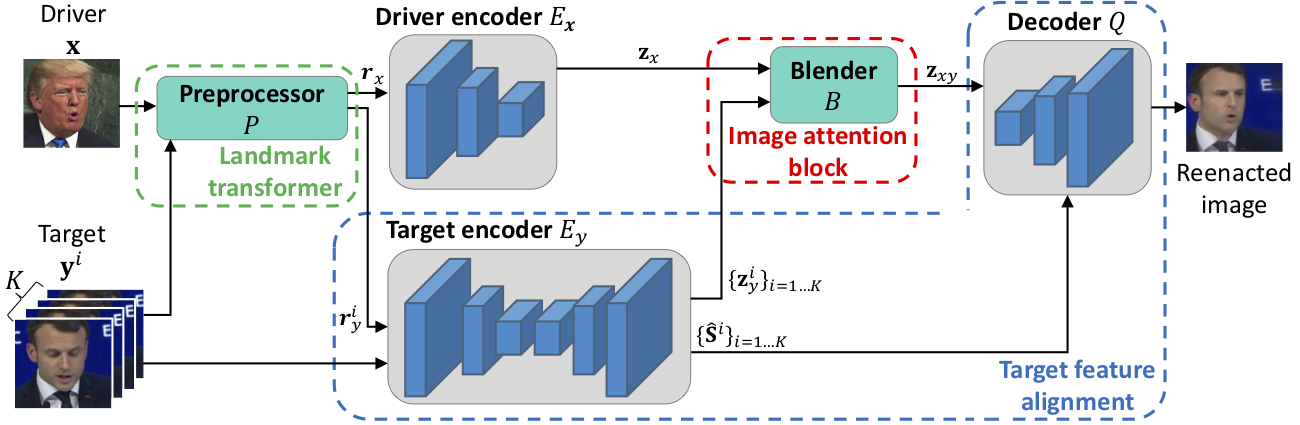

When there is a mismatch between the target identity and the driver identity, face reenactment suffers severe degradation in the quality of the result, especially in a few-shot setting. The identity preservation problem, where the model loses the detailed information of the target leading to a defective output, is the most common failure mode. The problem has several potential sources such as the identity of the driver leaking due to the identity mismatch, or dealing with unseen large poses. To overcome such problems, we introduce components that address the mentioned problem: image attention block, target feature alignment, and landmark transformer. Through attending and warping the relevant features, the proposed architecture, called MarioNETte, produces high-quality reenactments of unseen identities in a few-shot setting. In addition, the landmark transformer dramatically alleviates the identity preservation problem by isolating the expression geometry through landmark disentanglement. Comprehensive experiments are performed to verify that the proposed framework can generate highly realistic faces, outperforming all other baselines, even under a significant mismatch of facial characteristics between the target and the driver.

Here's a summary of the work. We can perform face reenactments under a few-shot or even a one-shot setting, where only a single target face image is provided. Previous approaches to face reenactments had a hard time preserving the identity of the target and tried to avoid the problem through fine-tuning or choosing a driver that does not diverge too much from the target. We tried to tackle this "identity preservation problem" through several novel components.

Instead of working with a spatial-agnostic representation of a driver or a target, we encode the style information to a spatial-information preserving representation. This allows us to maintain the details that easily get lost when utilizing spatial-agnostic representation such as those attained from AdaIN layers.

We proposed image attention blocks and feature alignment modules to attend to a specific location and warp feature-level information as well. Combining attention and flow allows us to naturally deal with multiple target images, making the proposed model to gracefully handle one-shot and few-shot settings without resorting to reductions such as sum/max/average pooling.

Another part of the contribution is the landmark transformer, where we alleviate the identity preservation problem even further. When the driver’s landmark differs a lot from that of the target, the reenacted face tends to resemble the driver’s facial characteristics. Landmark transformer disentangles the identity and expression and can be trained in an unsupervised fashion.